The Unfetter project is a joint effort between The MITRE Corporation and the United States National Security Agency (NSA).

Unfetter is based on MITRE’s Adversarial Tactics, Techniques, and Common Knowledge (ATT&CK) threat model, the associated Cyber Analytics Repository (CAR), and a graphical user interface known as the Cyber Analytic Repository Exploration Tool (CARET) that connects CAR and ATT&CK. Information summarizing ATT&CK, CAR, and CARET may be found below.

Contemporary detection methodologies rely heavily on anti-virus, commercial threat intelligence and other threat intelligence sharing products, and indicators of compromise (IOCs). Unfortunately, none of these is a panacea for the defender. Adversaries who manage to bypass or overcome these common defenses typically find, once inside, a network with minimal protection at the host level. Adversaries can defeat anti-virus by testing their malware in advance against popular security products or malware databases until it evades detection. Commercial threat intelligence products identify only a subset of adversary techniques and are only as good as the information available to them. IOCs judge the presence of malicious activity by detecting known bad objects on a computer; however, objects only reflect past adversary activity. Objects are useful for identifying individual adversaries from past compromises and behaviors, but they are of little use for detecting unknown actors who may target the network in the future. In the real world, defenders don’t track objects; they track behaviors.

A paradigm shift is required to secure enterprises more effectively. This shift accepts that today’s adversaries can penetrate the network boundary despite our current technologies, and it recognizes the need for additional host-based technologies and hyper-sensing capabilities. It also requires moving from IOCs to indicators of attack (IOAs), which describe the behaviors of the adversary, so that defenders can detect the unknown future actor.

This paradigm shift to IOAs introduces a subtle difference in how sensors must collect data. IOAs describe both actors and atomic actions; therefore, IOA detection can only be performed on data collected by capturing actions as they occur. Some call this kind of collection a real-time sensor; however, the term “real-time” has been misused to the point of being meaningless in the context of endpoint sensing. Scanning is not adequate, because a scan observes the present state of objects and captures neither actions nor actors. In some cases an action can be inferred from the objects on a system (a new file exists; therefore, someone must have created it), or the system may cache actors and actions (a running process tree). These pictures are still incomplete: they either cannot capture the actor or they miss actions that occur between scans. Scanning more frequently does not fix the problem. A scan captures discrete points in time, not a continuous stream, so even “continuous” scanning would have to run after every single operation on the computer to capture every atomic action. Any attempt to collect IOAs by scanning misses the myriad actions that happen on the system between scans. For this reason, sensors that seek to detect IOAs must collect data in real time; conversely, any sensor that only scans is blind to IOAs.
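The argument above can be illustrated with a small toy model. This is a minimal sketch under stated assumptions: the `Event` fields, the file names, and the `scan` and `stream` helpers are hypothetical and do not correspond to any real sensor API. Two scans bracket an attacker who creates a tool and then deletes it; both snapshots come out identical, while the event stream records every action together with its actor.

```python
"""Toy model contrasting periodic scanning with event-stream collection.

Hypothetical illustration only: the event fields and helpers are invented
for this example and are not a real sensor API.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    time: int    # logical timestamp of the action
    actor: str   # process that performed the action
    action: str  # "create" or "delete"
    obj: str     # file the action touched


# A short burst of activity: an attacker drops a tool, then cleans it up.
EVENTS = [
    Event(1, "explorer.exe", "create", "report.docx"),
    Event(3, "powershell.exe", "create", "tool.exe"),
    Event(4, "powershell.exe", "delete", "tool.exe"),
    Event(7, "winword.exe", "delete", "report.docx"),
]


def scan(events: list[Event], at: int) -> set[str]:
    """Point-in-time snapshot: the files present at time `at` (events sorted by time)."""
    present: set[str] = set()
    for e in events:
        if e.time > at:
            break
        if e.action == "create":
            present.add(e.obj)
        else:
            present.discard(e.obj)
    return present


def stream(events: list[Event]) -> list[tuple[str, str, str]]:
    """A real-time sensor records every action together with its actor."""
    return [(e.actor, e.action, e.obj) for e in events]


if __name__ == "__main__":
    # Scans at t=2 and t=5 bracket the attacker's actions at t=3 and t=4.
    print("scan t=2:", scan(EVENTS, 2))
    print("scan t=5:", scan(EVENTS, 5))
    # The stream shows who created and deleted tool.exe.
    for record in stream(EVENTS):
        print("event:", record)
```

Both scans report only `report.docx`; neither `tool.exe` nor `powershell.exe` ever appears in scan output, whereas the stream captures both the actions and the actor.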

This shift from scanning to real-time detection, from IOC to IOA, is powerful because real-time detection also provides the opportunity for prevention. If a real-time sensor captures an action while it is happening and determines the action is bad, it can prevent the bad action from occurring, something that scanning systems cannot do.
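A minimal sketch of why in-line collection makes prevention possible: the sensor evaluates each action before it is carried out and can veto it. The `Action` shape, the `intercept` function, and the shadow-copy rule are hypothetical illustrations, not part of Unfetter or any real endpoint product.

```python
"""Sketch of in-line prevention: a hook that sees an action before it
completes can block it. Event shape and rule are hypothetical."""
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Action:
    actor: str   # process requesting the operation
    verb: str    # e.g. "write", "delete"
    target: str  # object the operation touches


Rule = Callable[[Action], bool]


def deletes_shadow_copies(a: Action) -> bool:
    # Hypothetical behavioral rule: flag attempts to delete volume shadow copies.
    return a.verb == "delete" and a.target.startswith("shadowcopy:")


def intercept(action: Action, rules: list[Rule], log: list[str]) -> bool:
    """Evaluate the action before it runs; return True to allow, False to block."""
    for rule in rules:
        if rule(action):
            log.append(f"blocked {action.verb} {action.target} by {action.actor}")
            return False
    return True


if __name__ == "__main__":
    audit: list[str] = []
    rules = [deletes_shadow_copies]
    print(intercept(Action("backup.exe", "write", "D:/backup.bak"), rules, audit))   # allowed
    print(intercept(Action("vssadmin.exe", "delete", "shadowcopy:1"), rules, audit))  # blocked
    print(audit)
```

A scanner could only report the missing shadow copy after the fact; here the hook returns `False` before the operation happens, and the caller would refuse it.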

MITRE’s threat-based approach to network defense is guided by five key principles:

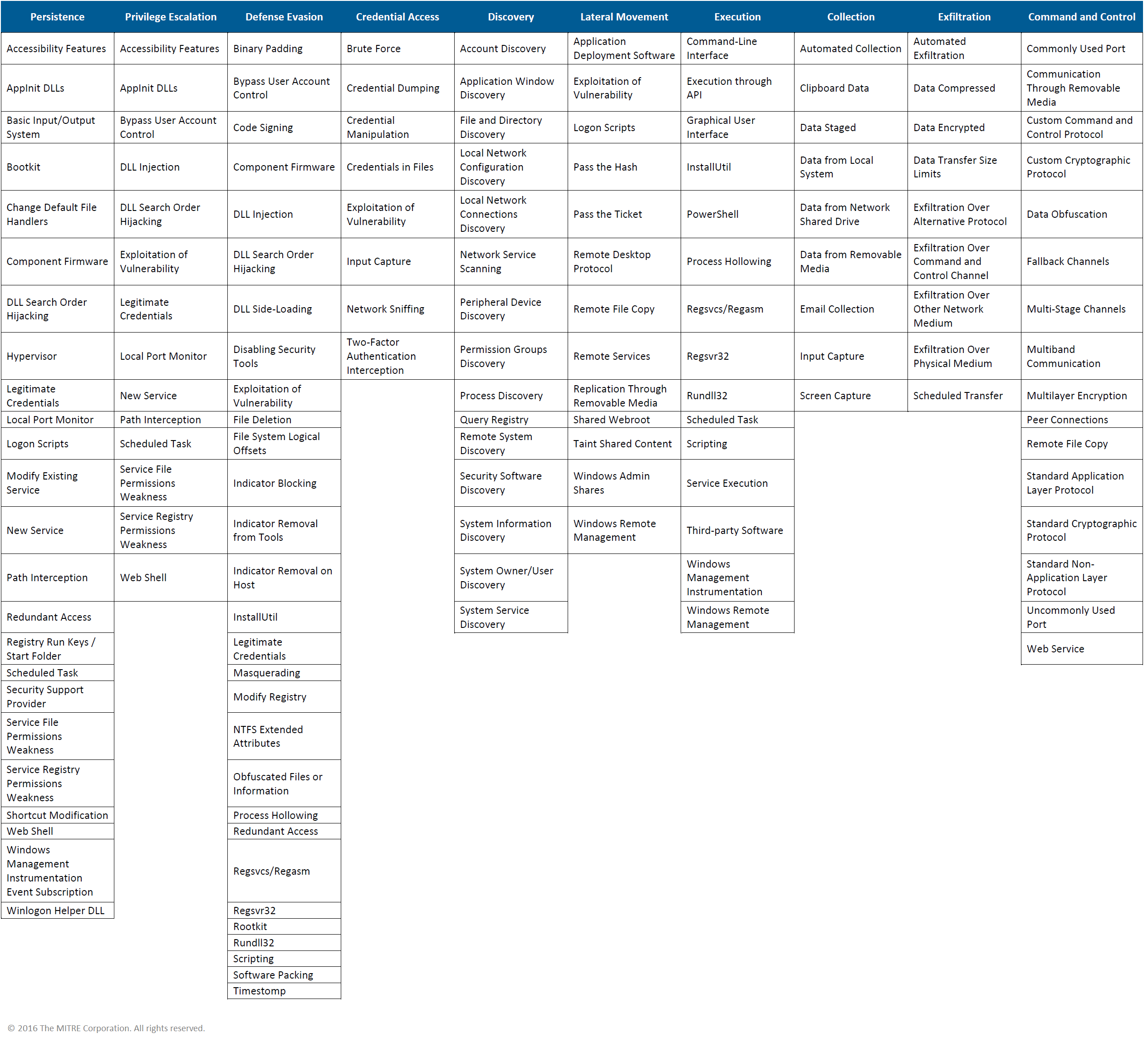

Adversarial Tactics, Techniques, and Common Knowledge (ATT&CK) is a model and framework for describing the actions an adversary may take while operating within an enterprise network. The model can be used to better characterize and describe post-compromise adversary behavior. It both expands the knowledge of network defenders and assists in prioritizing network defense by detailing the post-compromise (post-exploit and successful access) tactics, techniques, and procedures (TTPs) that advanced persistent threats use to achieve their objectives while operating inside a network.

ATT&CK incorporates information on cyber adversaries gathered through MITRE research, as well as from other disciplines such as penetration testing and red teaming, to establish a collection of knowledge characterizing the post-compromise activities of adversaries. While there is significant research on initial exploitation and the use of perimeter defenses, there is a gap in foundational knowledge of how adversaries operate after initial access has been gained. ATT&CK focuses on the TTPs adversaries use to make decisions, expand access, and execute their objectives. It aims to describe an adversary’s steps at a level high enough to apply widely across platforms, while still retaining enough detail to be technically useful.

The ten tactic categories for ATT&CK were derived from the later stages (control, maintain, and execute) of the seven-stage Cyber Attack Lifecycle (first articulated by Lockheed Martin as the Cyber Kill Chain®). ATT&CK provides a deeper level of granularity in describing what can occur during an intrusion after an adversary has acquired access.

Each category contains a list of techniques that an adversary could use to carry out that tactic. Each technique is broken down into a technical description, indicators, useful defensive sensor data, detection analytics, and potential mitigations. Applying intrusion data to the model then helps focus defense on the techniques commonly used across groups of activity and helps identify gaps in security. Defenders and decision makers can use the information in ATT&CK for various purposes, not just as a checklist of specific adversarial techniques.
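Applying intrusion data to the model can be sketched as a simple frequency count: rank techniques by how many observed groups use them, then flag those without a detection analytic. This is a minimal illustration, not ATT&CK tooling; the group names, the technique-to-group assignments, and the analytic inventory are invented sample data.

```python
"""Rank techniques by how many observed groups use them and flag those with
no analytic. All group/technique/coverage data below is made-up sample data."""
from collections import Counter

# Hypothetical intrusion data: techniques each activity group was seen using.
OBSERVED: dict[str, set[str]] = {
    "Group A": {"PowerShell", "Credential Dumping", "Scheduled Task"},
    "Group B": {"PowerShell", "Remote Desktop Protocol"},
    "Group C": {"PowerShell", "Credential Dumping"},
}

# Hypothetical inventory of techniques our analytics currently cover.
COVERED: set[str] = {"Scheduled Task"}


def prioritize(observed: dict[str, set[str]], covered: set[str]) -> list[tuple[str, int, bool]]:
    """Return (technique, group_count, has_analytic), most widely used first."""
    counts = Counter(t for techniques in observed.values() for t in techniques)
    # Sort by descending group count, then alphabetically for a stable order.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(t, n, t in covered) for t, n in ranked]


if __name__ == "__main__":
    for technique, n, has_analytic in prioritize(OBSERVED, COVERED):
        status = "covered" if has_analytic else "GAP"
        print(f"{technique:25} used by {n} group(s)  {status}")
```

With this sample data, PowerShell ranks first (three groups) and is flagged as a gap, while Scheduled Task is the only covered technique.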

ATT&CK’s individual technique details focus largely on Microsoft Windows enterprise networks. The framework and its higher-level categories can also be applied to other platforms and environments.

The ATT&CK-Based Analytics Development Method is the process MITRE used to create, evaluate, and revise analytics with the intent of detecting cyber adversary behavior. It was refined over years of experience investigating attacker behaviors, building sensors to acquire data, and analyzing data to detect adversary behavior. In describing the process, we use the terms white team, red team, and blue team, which we define as follows:

The ATT&CK-Based Analytics Development Method contains seven steps:

The Cyber Analytics Repository (CAR) is a knowledge base of analytics developed by MITRE based on the Adversarial Tactics, Techniques, and Common Knowledge (ATT&CK) model and framework. CAR uses the ATT&CK-Based Analytics Development Method outlined above to create, evaluate, and revise analytics with the intent of detecting cyber adversary behavior. Developing an analytic involves the following activities: identifying and prioritizing adversary behaviors to detect from the ATT&CK threat model, identifying the data necessary to detect those behaviors, creating a sensor to collect the data if necessary, and creating the analytic that detects the identified behaviors.
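The four development activities can be walked through for a single behavior in a short sketch. Everything here is a hypothetical example rather than an actual CAR analytic: the behavior (a burst of discovery commands from one parent process), the field names, the sensor field inventory, and the window and threshold values are all assumptions chosen for illustration.

```python
"""Walk the four analytic-development activities for one behavior.
Behavior, field names, sensor inventory, and thresholds are hypothetical."""
from dataclasses import dataclass

# 1. Behavior to detect (chosen and prioritized from the threat model).
BEHAVIOR = "burst of discovery commands from a single parent process"
DISCOVERY_TOOLS = {"whoami.exe", "ipconfig.exe", "net.exe", "systeminfo.exe", "tasklist.exe"}

# 2. Data needed to observe that behavior.
REQUIRED_FIELDS = {"time", "parent_pid", "image"}

# 3. Fields the (hypothetical) deployed sensor emits for process-creation events.
SENSOR_FIELDS = {"time", "pid", "parent_pid", "image", "user"}


@dataclass(frozen=True)
class ProcessCreate:
    time: float      # seconds since an arbitrary epoch
    parent_pid: int  # process that launched the new process
    image: str       # executable name of the new process


def analytic(events: list[ProcessCreate], window: float = 30.0, threshold: int = 3) -> set[int]:
    """4. Flag parents that launch >= `threshold` distinct discovery tools within `window` seconds."""
    by_parent: dict[int, list[ProcessCreate]] = {}
    for e in sorted(events, key=lambda ev: ev.time):
        if e.image.lower() in DISCOVERY_TOOLS:
            by_parent.setdefault(e.parent_pid, []).append(e)
    hits: set[int] = set()
    for pid, seq in by_parent.items():
        # Slide a window starting at each event; seq is already time-ordered.
        for i, start in enumerate(seq):
            tools = {e.image.lower() for e in seq[i:] if e.time - start.time <= window}
            if len(tools) >= threshold:
                hits.add(pid)
                break
    return hits


if __name__ == "__main__":
    print("behavior:", BEHAVIOR)
    missing = REQUIRED_FIELDS - SENSOR_FIELDS
    print("sensor gap:", missing or "none; existing sensor suffices")
    sample = [
        ProcessCreate(0.0, 100, "whoami.exe"),
        ProcessCreate(5.0, 100, "ipconfig.exe"),
        ProcessCreate(9.0, 100, "net.exe"),
        ProcessCreate(12.0, 200, "whoami.exe"),
    ]
    print("suspicious parents:", analytic(sample))
```

With the sample events, parent process 100 is flagged because it launches three distinct discovery tools within nine seconds, while parent 200 launches only one. If the sensor lacked a required field, step 3 would call for a new or extended sensor before the analytic could run. The window and threshold are arbitrary and would need tuning against real data.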

There are multiple ways to categorize analytics, and the categories used here are not exhaustive. Because the analytics are derived from a threat model based on adversary behaviors, some types of analytics (e.g., signature-based and profile-based) are out of scope. The following four types of analytics are identified in CAR:

Within each analytic, the following information is available:

The Cyber Analytic Repository Exploration Tool (CARET) is a proof-of-concept graphical user interface designed to connect the groups and techniques listed in ATT&CK to the analytics, data model, and sensors described in CAR. CARET is used to link adversaries, techniques, analytics, data, and sensors to develop an understanding of defensive capabilities and to aid in their development and use. In particular, CARET helps answer the following types of questions:

The Unfetter reference implementation lets users apply the additional analytics it provides and experiment with test cases to develop more robust analytics and better detection methods.

While Unfetter is designed to help network defenders, cyber security professionals, and decision makers identify and analyze defensive gaps, its overall objective is to help the community iterate, evolve, and progress toward the broader goal of greater network resiliency. Unfetter is an open-source project.

Cyber resiliency, as defined by the MITRE Cyber Resiliency Engineering Framework, addresses four chief goals: anticipate, withstand, recover, and evolve. Unfetter contributes to cyber resiliency by addressing the need to anticipate and evolve. Through gap analysis of detective capabilities relative to the threat, Unfetter provides a mechanism to anticipate an enterprise’s gaps in adversary detection. This in turn enables the enterprise to minimize the impact of anticipated or actual adversary attacks through smarter sensing. Better sensing also enables effective decision-making and faster response times, and faster responses, implemented through automation, minimize the impact of current attacks. In addition, decision-making that applies learning and reasoning over time enables the system to evolve toward a state that is less vulnerable to future attacks, especially attacks similar to previous ones. Overall, Unfetter not only strengthens an enterprise’s ability to anticipate attacks but can also support other cyber resiliency goals, such as evolution.
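The gap analysis described above can be sketched as a walk along the chain that CARET links together: group, techniques, analytics, data, and sensors. This is a minimal illustration under assumed data and not Unfetter’s implementation; every identifier (group name, technique and analytic IDs, sensor name, and the object/action data model) is a hypothetical placeholder rather than real ATT&CK or CAR content.

```python
"""Gap analysis along group -> technique -> analytic -> data -> sensor.
Every identifier is a hypothetical placeholder, not real ATT&CK/CAR content."""

# Techniques attributed to each adversary group.
GROUP_TECHNIQUES: dict[str, set[str]] = {
    "Group A": {"T-cred-dump", "T-sched-task", "T-rdp"},
}
# Analytics that detect each technique ("T-rdp" deliberately has none).
TECHNIQUE_ANALYTICS: dict[str, set[str]] = {
    "T-cred-dump": {"AN-1"},
    "T-sched-task": {"AN-2"},
}
# (object, action) pairs each analytic needs from the data model.
ANALYTIC_DATA: dict[str, set[tuple[str, str]]] = {
    "AN-1": {("process", "access")},
    "AN-2": {("process", "create"), ("file", "create")},
}
# (object, action) pairs the deployed sensors actually collect.
SENSOR_DATA: dict[str, set[tuple[str, str]]] = {
    "sensor-x": {("process", "create"), ("file", "create")},
}


def collected() -> set[tuple[str, str]]:
    """Union of everything the deployed sensors collect."""
    return set().union(*SENSOR_DATA.values())


def detectable(technique: str) -> bool:
    """True if at least one analytic for the technique has all of its data collected."""
    available = collected()
    return any(ANALYTIC_DATA[a] <= available for a in TECHNIQUE_ANALYTICS.get(technique, set()))


def gaps(group: str) -> dict[str, str]:
    """Map each undetectable technique used by `group` to the reason it is a gap."""
    result: dict[str, str] = {}
    for technique in sorted(GROUP_TECHNIQUES[group]):
        if not TECHNIQUE_ANALYTICS.get(technique):
            result[technique] = "no analytic"
        elif not detectable(technique):
            result[technique] = "analytic exists but its data is not collected"
    return result


if __name__ == "__main__":
    for technique, reason in gaps("Group A").items():
        print(f"{technique}: {reason}")
```

The two gap reasons correspond to two different remedies: develop a new analytic, or deploy a sensor that collects the missing data.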

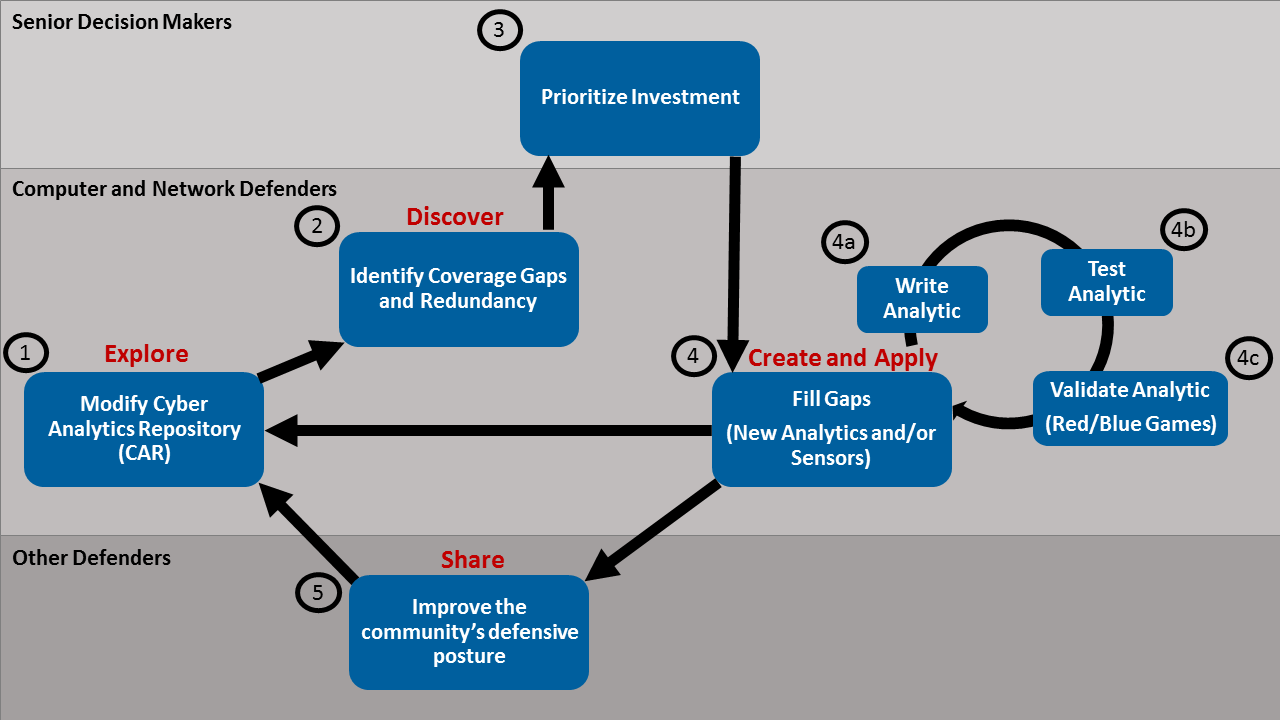

The overarching process shows how each release, and the core concepts on which it is based, educates and informs the community as a whole.

Use threat-based intelligence to identify gaps and redundancies in your enterprise’s defenses. This is part of an iterative process:

Viewing CARET online allows you to explore the concepts of groups, techniques, analytics, data model, and sensors to understand how analytics can be used to identify adversary tradecraft. Building upon the concepts in CARET, you can discover new connections between groups, analytics, and sensors. Downloading CAR, ATT&CK, and CARET enables you to create new analytics and customize the available information for your environment. The Unfetter reference implementation allows you to experiment with analytics in a virtual environment.