11. Verifying Results With The SAF CLI

Verification

At this point we have a much more mature workflow file. We have one more activity we need to do -- verification, or checking that the output of our validation run met our expectations.

Note that "meeting our expectations" does not automatically mean that there are no failing tests. In many real-world use cases, security tests fail, but the software is still considered worth the risk to deploy because of mitigations for that risk, or perhaps the requirement is inapplicable due to the details of the deployment. With that said, we still want to run our tests to make sure we are continually collecting data; we just don't want our pipeline to halt if it finds a test that we were always expecting to fail.

By default, the InSpec executable returns a code 100 if any tests in a profile run fail. Pipeline orchestrators, like most software, interpret any non-zero return code as a serious failure, and will halt the pipeline run accordingly unless we explicitly tell them to ignore errors. This is why the "VALIDATE - Run InSpec" step has the continue-on-error: true attribute specified.
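
As a rough shell-level analogy for what continue-on-error: true does for a workflow step, the sketch below captures a non-zero exit code instead of letting it stop the script. The fake_scan function is a hypothetical stand-in for inspec exec (it is not part of InSpec); it simply exits with the code 100 described above.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for `inspec exec`: always exits with code 100,
# the code the text above says InSpec returns when tests fail.
fake_scan() {
  return 100
}

# Left unhandled, a non-zero code like this would stop a pipeline step.
# Capturing it lets us record the result and keep going.
scan_status=0
fake_scan || scan_status=$?

echo "scan exited with code ${scan_status}"
if [ "${scan_status}" -ne 0 ]; then
  echo "continuing anyway so later steps can collect the report"
fi
```

The point is only that the failure is recorded rather than ignored: the exit code is still available for a later step to act on, which is the role the threshold check plays in this pipeline.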

Our goal is to complete our InSpec scan, collect the result as a report file, and then parse that file to determine if we met our own threshold of security. We can do this with the SAF CLI.

The SAF CLI

The SAF CLI is one of the tools that the SAF supports to help automate security validation. It is our "kitchen-sink" utility for pipelines. If you took the SAF User Class, you are already familiar with the SAF CLI's attestation function.

This tool was installed alongside InSpec when you ran the ./build-lab.sh script. For general installation instructions, see the first link in the above paragraph.

SAF CLI Capabilities

Some SAF CLI capabilities are listed in this diagram, but you can see all of them on the SAF CLI documentation.

In addition to the documentation site, you can view the SAF CLI's capabilities by running:

saf help

The MITRE Security Automation Framework (SAF) Command Line Interface (CLI) brings together applications, techniques, libraries, and tools developed by MITRE and the security community to streamline security automation for systems and DevOps pipelines

VERSION
  @mitre/saf/1.2.5 linux-x64 node-v16.19.0

USAGE
  $ saf [COMMAND]

TOPICS
  attest      [Attest] Attest to 'Not Reviewed' control requirements (that can’t be tested automatically by security tools and hence require manual review), helping to account for all requirements
  convert     [Normalize] Convert security results from all your security tools between common data formats
  emasser     [eMASS] The eMASS REST API implementation
  generate    [Generate] Generate pipeline thresholds, configuration files, and more
  harden      [Harden] Implement security baselines using Ansible, Chef, and Terraform content: Visit https://saf.mitre.org/#/harden to explore and run hardening scripts
  plugins     List installed plugins.
  scan        [Scan] Scan to get detailed security testing results: Visit https://saf.mitre.org/#/validate to explore and run inspec profiles
  supplement  [Supplement] Supplement (ex. read or modify) elements that provide contextual information in the Heimdall Data Format results JSON file such as `passthrough` or `target`
  validate    [Validate] Verify pipeline thresholds
  view        [Visualize] Identify overall security status and deep-dive to solve specific security defects

COMMANDS
  convert   The generic convert command translates any supported file-based security results set into the Heimdall Data Format
  harden    Visit https://saf.mitre.org/#/harden to explore and run hardening scripts
  heimdall  Run an instance of Heimdall Lite to visualize your data
  help      Display help for saf.
  plugins   List installed plugins.
  scan      Visit https://saf.mitre.org/#/validate to explore and run inspec profiles
  summary   Get a quick compliance overview of an HDF file
  version

You can get more information on a specific topic by running:

saf [TOPIC] -h

Updating the Workflow File

Let's add two steps to our pipeline to use the SAF CLI to understand our InSpec scan results before we verify them against a threshold.

- name: VERIFY - Display our results summary
  uses: mitre/saf_action@v1
  with:
    command_string: "view summary -i results/pipeline_run.json"
- name: VERIFY - Ensure the scan meets our results threshold
  uses: mitre/saf_action@v1 # check if the pipeline passes our defined threshold
  with:
    command_string: "validate threshold -i results/pipeline_run.json -F threshold.yml"

pipeline.yml after adding verify steps

name: Demo Security Validation Gold Image Pipeline

on:
  push:
    branches: [main] # trigger this action on any push to main branch

jobs:
  gold-image:
    name: Gold Image NGINX
    runs-on: ubuntu-20.04
    env:
      CHEF_LICENSE: accept # so that we can use InSpec without manually accepting the license
      PROFILE: my_nginx # path to our profile
    steps:
      - name: PREP - Update runner # updating all dependencies is always a good start
        run: sudo apt-get update
      - name: PREP - Install InSpec executable
        run: curl https://omnitruck.chef.io/install.sh | sudo bash -s -- -P inspec -v 5
      - name: PREP - Check out this repository # because that's where our profile is!
        uses: actions/checkout@v3
      - name: LINT - Run InSpec Check # double-check that we don't have any serious issues in our profile code
        run: inspec check $PROFILE
      - name: DEPLOY - Run a Docker container from nginx
        run: docker run -dit --name nginx nginx:latest
      - name: DEPLOY - Install Python for our nginx container
        run: |
          docker exec nginx apt-get update -y
          docker exec nginx apt-get install -y python3
      - name: HARDEN - Fetch Ansible role
        run: |
          git clone --branch docker https://github.com/mitre/ansible-nginx-stigready-hardening.git || true
          chmod 755 ansible-nginx-stigready-hardening
      - name: HARDEN - Fetch Ansible requirements
        run: ansible-galaxy install -r ansible-nginx-stigready-hardening/requirements.yml
      - name: HARDEN - Run Ansible hardening
        run: ansible-playbook --inventory=nginx, --connection=docker ansible-nginx-stigready-hardening/hardening-playbook.yml
      - name: VALIDATE - Run InSpec
        continue-on-error: true # we don't want to stop if our InSpec run finds failures, we want to continue and record the result
        run: |
          inspec exec $PROFILE \
            --input-file=$PROFILE/inputs.yml \
            --target docker://nginx \
            --reporter cli json:results/pipeline_run.json
      - name: VALIDATE - Save Test Result JSON # save our results to the pipeline artifacts, even if the InSpec run found failing tests
        uses: actions/upload-artifact@v3
        with:
          path: results/pipeline_run.json
      - name: VERIFY - Display our results summary
        uses: mitre/saf_action@v1
        with:
          command_string: "view summary -i results/pipeline_run.json"
      - name: VERIFY - Ensure the scan meets our results threshold
        uses: mitre/saf_action@v1 # check if the pipeline passes our defined threshold
        with:
          command_string: "validate threshold -i results/pipeline_run.json -F threshold.yml"

A few things to note here:

- Both steps are using the SAF CLI GitHub Action. This way, we don't need to install it directly on the runner; we can just pass in the command string.

- We added the summary step because it will print us a concise summary inside the pipeline job view itself. That command takes one file argument; the results file we want to summarize.

- The validate threshold command, however, needs two files -- one is our report file as usual, and the other is a threshold file.
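
Since the lab environment already has the SAF CLI installed, the same two commands can also be tried locally before pushing. The sketch below is a hedged example, not part of the lab: it assumes saf is on the PATH, that results/pipeline_run.json and threshold.yml exist in the working directory, and that saf validate threshold exits non-zero when the threshold is not met (which is what lets the pipeline step fail). The outcome variable is purely illustrative.

```shell
#!/usr/bin/env bash
# Same arguments the workflow passes through command_string.
results="results/pipeline_run.json"
threshold="threshold.yml"

if command -v saf >/dev/null 2>&1 && [ -f "${results}" ] && [ -f "${threshold}" ]; then
  # One file argument: the results file to summarize.
  saf view summary -i "${results}"

  # Two file arguments: the results file and the threshold file.
  # Assumption: a non-zero exit code means the threshold was not met.
  if saf validate threshold -i "${results}" -F "${threshold}"; then
    outcome="threshold met"
  else
    outcome="threshold not met"
  fi
else
  # Nothing to check if the CLI or either input file is missing.
  outcome="skipped"
fi

echo "local check: ${outcome}"
```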

Threshold Files

Threshold files define what "passing" means for our pipeline. As we said earlier, that is more nuanced than failing the pipeline whenever a single test fails.

Consider the following sample threshold file:

# threshold.yml file
compliance:
  min: 80
passed:
  total:
    min: 1
failed:
  total:
    max: 2

This file specifies that we require a minimum of 80% of the tests to pass. We also specify that at least one of them should pass, and that at maximum two of them can fail.
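
To make the arithmetic concrete, here is a small sketch that applies those three limits to made-up counts. It is not how the SAF CLI is implemented. The counts are hypothetical, and the compliance formula (passed tests as a whole-number percentage of passed plus failed tests) is a simplifying assumption; the SAF CLI's own calculation may take other statuses into account.

```shell
#!/usr/bin/env bash
# Hypothetical counts for one scan; illustration values, not real results.
passed=9
failed=2

# Assumption: compliance is the share of passing tests among passed + failed,
# truncated to a whole percentage by integer division.
total=$((passed + failed))
compliance=$((passed * 100 / total))

# Limits copied from the sample threshold.yml above.
min_compliance=80
min_passed=1
max_failed=2

# Every limit must hold for the run to count as passing.
verdict="pass"
[ "${compliance}" -ge "${min_compliance}" ] || verdict="fail"
[ "${passed}" -ge "${min_passed}" ] || verdict="fail"
[ "${failed}" -le "${max_failed}" ] || verdict="fail"

echo "compliance=${compliance}% verdict=${verdict}"
```

With these numbers the run passes even though two tests failed, which is the situation the threshold file is meant to allow. Changing failed to 3 would break both the compliance minimum and the failed maximum.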

Threshold Files Options

To make more specific or detailed thresholds, check out this documentation on generating threshold files.

NOTE: You can name the threshold file something else or put it in a different location. We specify the name and location only for convenience.

This is a sample pipeline, so we are not too worried about being very stringent. For now, let's settle for running the pipeline with no errors (that is, as long as each test runs, we do not care if it passed or failed, but a source code error should still fail the pipeline).

Create a new file called threshold.yml in the main directory to specify the threshold for acceptable test results:

error:
  total:
    max: 0

How could we change this threshold file to ensure that the pipeline run will fail?

And with that, we have a complete pipeline file. Let's commit our changes and see what happens.

git add .
git commit -s -m "finishing the pipeline"
git push origin main

$> git add .
$> git commit -s -m "finishing the pipeline"
[main e796abd] finishing the pipeline
 2 files changed, 14 insertions(+), 1 deletion(-)
 create mode 100644 threshold.yml
$> git push origin main
Enumerating objects: 10, done.
Counting objects: 100% (10/10), done.
Delta compression using up to 2 threads
Compressing objects: 100% (3/3), done.
Writing objects: 100% (6/6), 720 bytes | 720.00 KiB/s, done.
Total 6 (delta 2), reused 1 (delta 0), pack-reused 0
remote: Resolving deltas: 100% (2/2), completed with 2 local objects.
To https://github.com/wdower/saf-training-lab-environment
   c4d9c67..e796abd  main -> main
$>

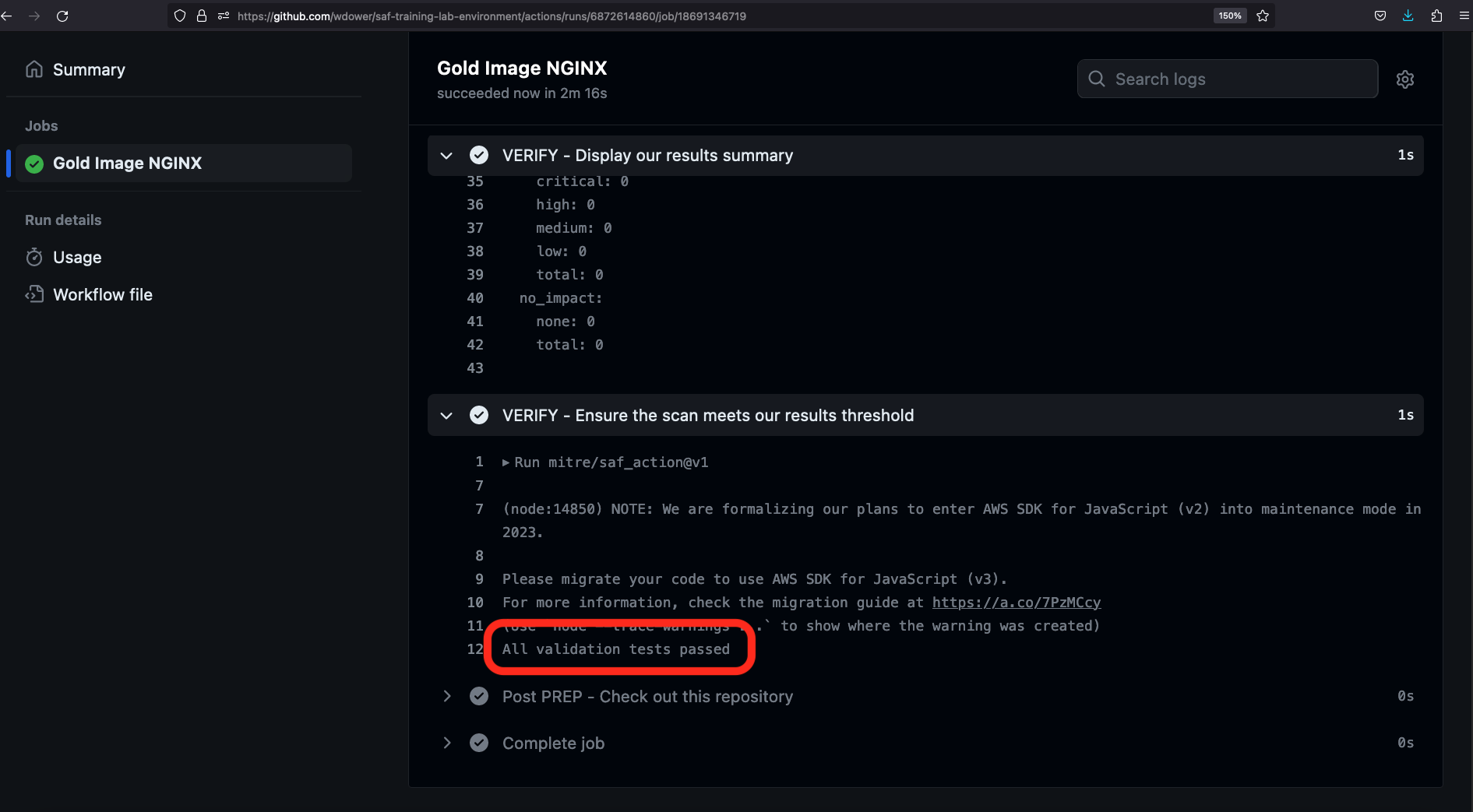

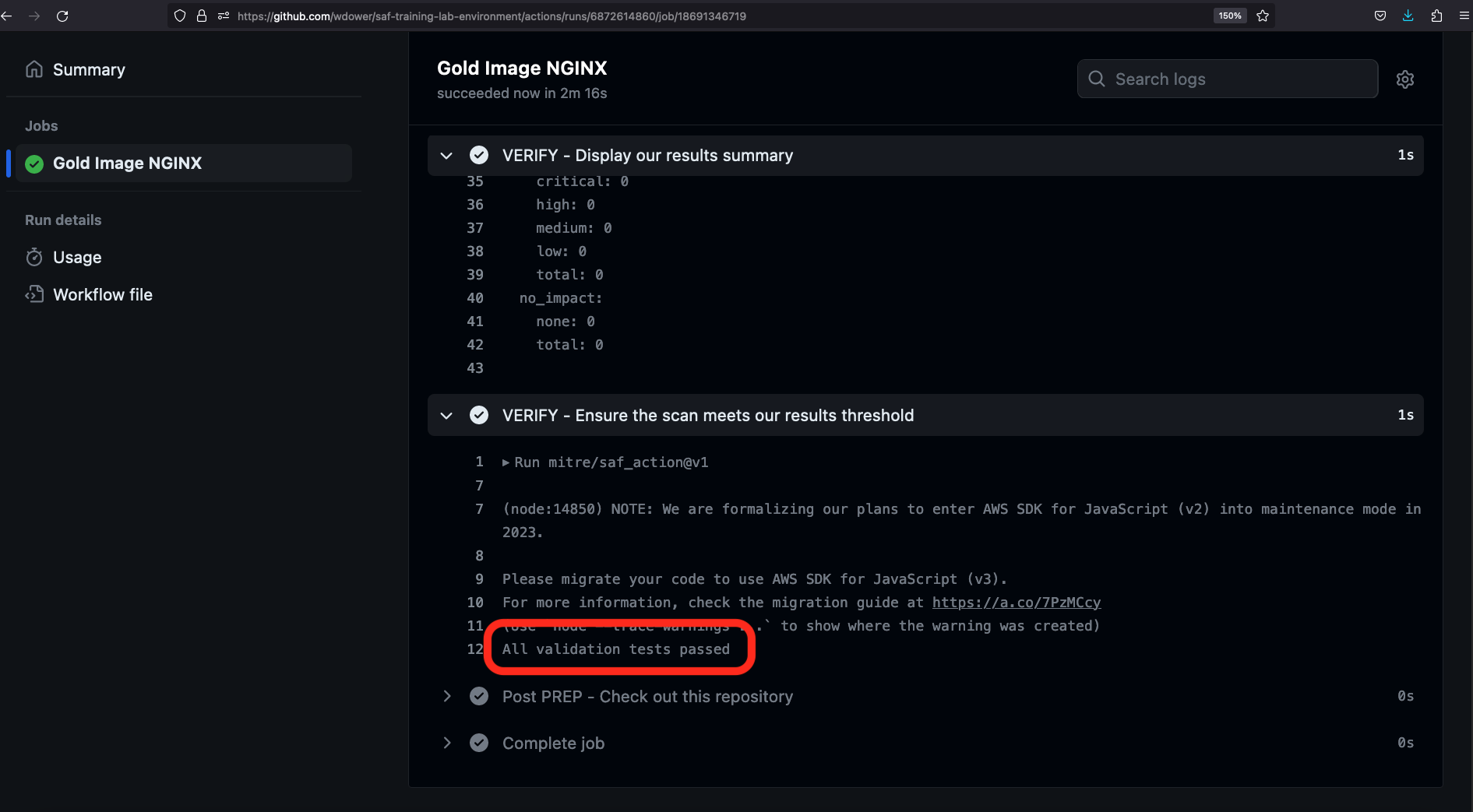

Let's hop back to our browser and take a look at the output:

There we go! All validation tests passed!

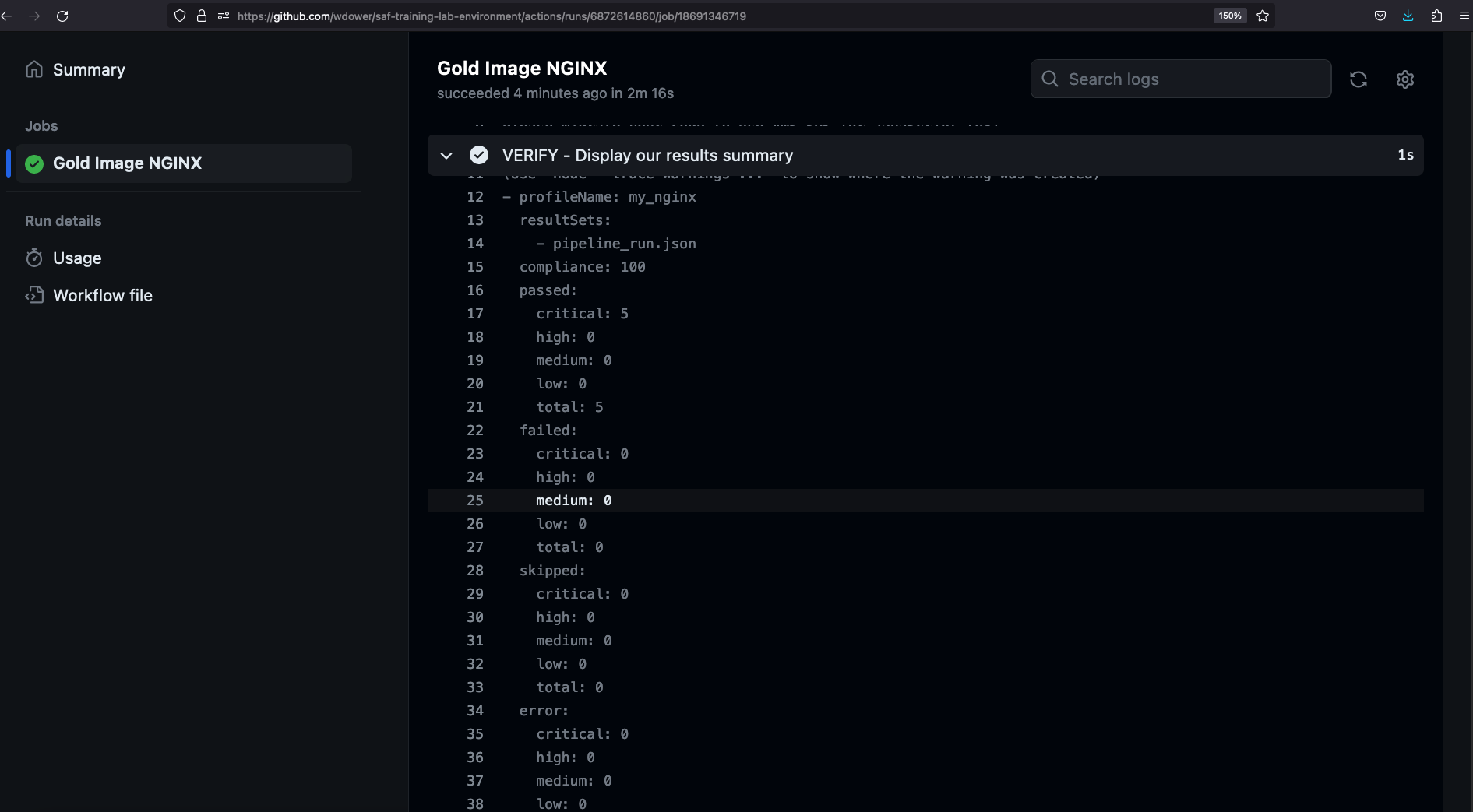

Note that in the SAF CLI summary step, we get a simple YAML summary of the InSpec scan:

We see five critical tests (remember how we set them all to impact 1.0?) passing, and no failures:

- profileName: my_nginx
  resultSets:
    - pipeline_run.json
  compliance: 100
  passed:
    critical: 5
    high: 0
    medium: 0
    low: 0
    total: 5
  failed:
    critical: 0
    high: 0
    medium: 0
    low: 0
    total: 0
  skipped:
    critical: 0
    high: 0
    medium: 0
    low: 0
    total: 0
  error:
    critical: 0
    high: 0
    medium: 0
    low: 0
    total: 0
  no_impact:
    none: 0
    total: 0

Note also that our test report is now available as an artifact from the overall pipeline run summary view:

From here, we can download that file and drop it off in something like Heimdall or feed it into some other security process at our leisure (or we can add a pipeline step to do that for us!).

In a real use case, if our pipeline passed, we would next save our bona fide hardened image to a secure registry where it could be distributed to developers. If the pipeline did not pass, we would have already collected data describing why, in the form of InSpec scan reports that we save as artifacts.